Overview

This page demonstrates the well-known Bidirectional Encoder Representations from Transformers (BERT) model, extended with two bidirectional LSTM layers, a fully connected layer, a dropout layer, and a classification layer, on a classification task: the popular Kaggle competition Natural Language Processing with Disaster Tweets. This architecture achieved a top 4% score on the leaderboard. To illustrate the reliability of the model's predictions, we present 10 randomly selected predictions and explain how the model decided on their class using Local Interpretable Model-Agnostic Explanations (LIME).

Introduction

We searched for a model that would achieve a top 4% score in Kaggle's Natural Language Processing with Disaster Tweets competition. The dataset for this competition is a CSV file containing an id column, which serves as a unique identifier for each tweet, a text column with the tweet's text, and several other columns that were not used in training. Further information about the dataset can be found in the "Data" section of the competition's page. Note that the discussion on this page pertains specifically to the training dataset.

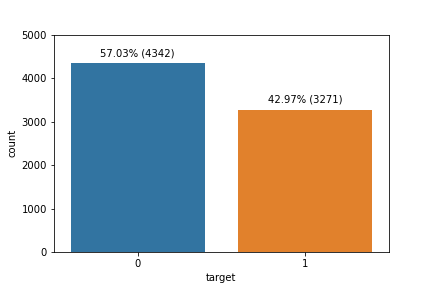

The training dataset consists of 7613 tweets, ranging in length from 1 to 31 words and from 7 to 157 characters. The tweets may also contain hashtags, mentions, and links. They are classified into two categories, real disaster and not real disaster, with the following distribution:
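The length and entity statistics above can be computed per tweet with a few lines of standard-library Python. This is a minimal sketch, not the code used for this page: the function name `describe_tweet`, the regular expressions, and the sample tweet are all illustrative assumptions, and the patterns only approximate how Twitter defines hashtags, mentions, and links.

```python
import re

# Illustrative approximations of tweet entities (assumed patterns,
# not Twitter's official entity rules).
HASHTAG = re.compile(r"#\w+")
MENTION = re.compile(r"@\w+")
LINK = re.compile(r"https?://\S+")


def describe_tweet(text):
    """Return basic length and entity statistics for one tweet."""
    return {
        "words": len(text.split()),   # whitespace-separated word count
        "chars": len(text),           # character count, including spaces
        "hashtags": HASHTAG.findall(text),
        "mentions": MENTION.findall(text),
        "links": LINK.findall(text),
    }


# Hypothetical tweet, not taken from the competition data.
sample = "Flooding reported near #Riverside @citynews http://t.co/abc123"
stats = describe_tweet(sample)
print(stats)
```

Applying such a function to every row of the text column and taking the minimum and maximum of the word and character counts would give ranges like the ones reported above.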

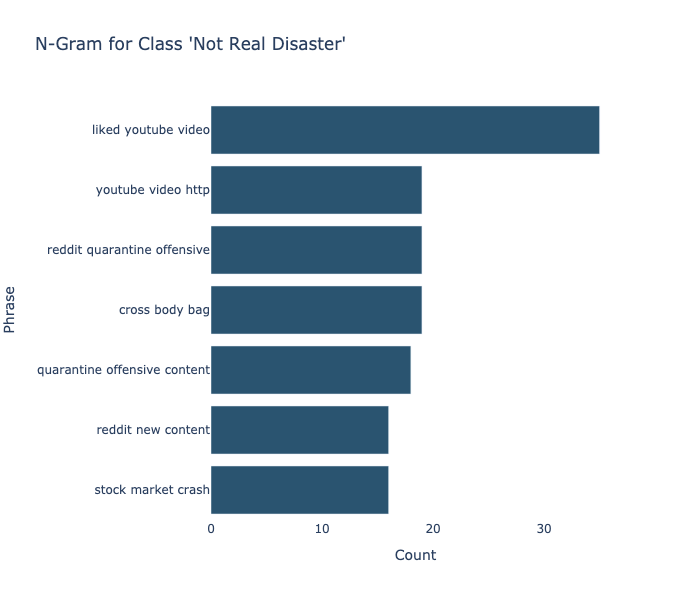

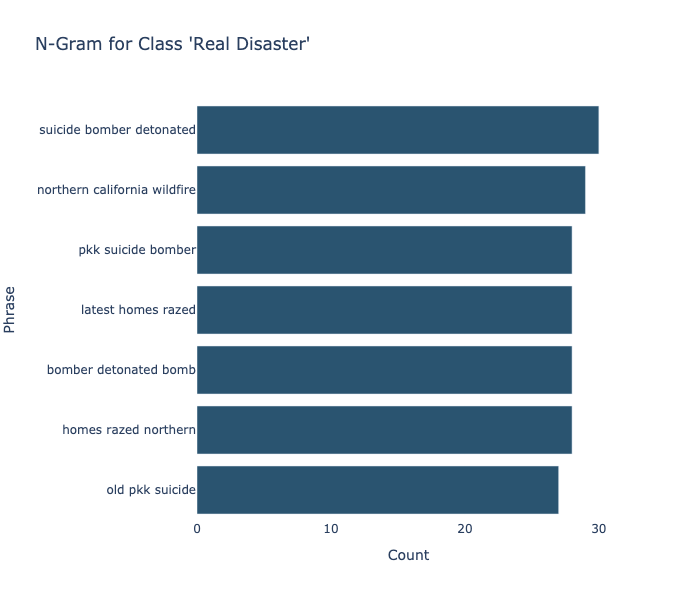

We also looked at the n-grams for both classes. An n-gram is a group of N consecutive items from a text (three words in Figure 2); the items are usually words, but can also be smaller units such as syllables or characters. N-gram models take sequence data as input and produce a probability distribution over all possible next items, and a prediction is made based on the likelihood of each item. Besides next-word prediction, n-grams are also used in language identification, information retrieval, and DNA sequence prediction.
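As a rough illustration of how word n-grams can be counted, the sketch below extracts trigrams from a list of texts and returns the most frequent ones. The helper names `ngrams` and `top_ngrams` and the two example tweets are hypothetical; the tokenization is a plain lowercase whitespace split, which is an assumption and likely simpler than what was used to produce the figures.

```python
from collections import Counter


def ngrams(text, n=3):
    """Return all n-grams in a text as tuples of lowercase words."""
    words = text.lower().split()
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


def top_ngrams(texts, n=3, k=10):
    """Count n-grams over many texts and return the k most common."""
    counts = Counter()
    for text in texts:
        counts.update(ngrams(text, n))
    return counts.most_common(k)


# Hypothetical tweets, not taken from the competition data.
tweets = [
    "forest fire near the lake",
    "huge forest fire near the town",
]
print(top_ngrams(tweets, n=3, k=2))
```

Running the same count separately on the tweets of each class gives one ranked n-gram list per class.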

To classify the dataset, we used Bidirectional Encoder Representations from Transformers (BERT). BERT is designed to achieve state-of-the-art results when fine-tuned on various tasks, including classification, question answering, and language inference. When designing a language model, it is crucial to consider the tasks the model is trained to perform. BERT is pre-trained to predict masked tokens and to determine whether sentence B follows sentence A. For a more comprehensive understanding of the model, refer to the original research paper.
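To make the masked-token objective more concrete, here is a small sketch of the masking recipe described in the BERT paper: about 15% of token positions are selected, and a selected token is replaced by `[MASK]` 80% of the time, by a random token 10% of the time, and left unchanged 10% of the time. The function name `mask_tokens`, the toy vocabulary, and the word-level tokens are assumptions for illustration; real BERT operates on WordPiece tokens, and this step belongs to pre-training, not to the fine-tuning done on this page.

```python
import random


def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Return (masked_tokens, labels) for masked-token prediction.

    labels[i] holds the original token at selected positions and None
    elsewhere, so the loss is computed only on selected positions.
    """
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= select_prob:
            continue                       # position not selected
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            masked[i] = "[MASK]"           # 80%: replace with mask token
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with random token
        # remaining 10%: keep the original token
    return masked, labels


# Hypothetical vocabulary and sentence.
vocab = ["fire", "flood", "storm", "the", "valley"]
tokens = "the fire spread across the valley".split()
masked, labels = mask_tokens(tokens, vocab, random.Random(0))
print(masked, labels)
```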

Model Architecture

The pre-trained BERT model can be fine-tuned with the addition of a single output layer. In our case, however, we chose a more complex architecture. First, the text was tokenized and masked as described in the original paper, and the sequence output of the model was captured and fed into a bidirectional LSTM layer with 1024 units and a dropout rate of 90%. This layer produced a sequence output, which was fed into a second bidirectional LSTM layer with the same hyperparameters. The pooled output of the latter layer was passed through a dense layer with 64 hidden units and a ReLU activation function, followed by a dropout layer with a rate of 20%. Finally, the result was passed to the output layer, which contained two units and used the softmax activation function.
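The layers stacked on top of BERT could be sketched in Keras roughly as follows. This is not the original training code. The function name `build_head` and the default sequence length of 160 are assumptions, the input tensor is a stand-in for BERT's sequence output (loading the encoder itself is omitted), and we assume the 90% rate refers to the LSTM layer's `dropout` argument and that the "pooled output" is the final output of the second bidirectional LSTM.

```python
import tensorflow as tf


def build_head(seq_len=160, hidden_size=1024, lstm_units=1024,
               lstm_dropout=0.9, dense_units=64, dense_dropout=0.2):
    """Build the classification head that sits on top of BERT's sequence output."""
    layers = tf.keras.layers
    # Stand-in for BERT's sequence output: one hidden_size vector per token.
    # seq_len=160 is an assumed value, not taken from the original setup.
    sequence_output = tf.keras.Input(shape=(seq_len, hidden_size),
                                     name="bert_sequence_output")
    # First BiLSTM keeps the full sequence so the second one can consume it.
    x = layers.Bidirectional(
        layers.LSTM(lstm_units, dropout=lstm_dropout, return_sequences=True)
    )(sequence_output)
    # Second BiLSTM returns a single vector per example.
    x = layers.Bidirectional(
        layers.LSTM(lstm_units, dropout=lstm_dropout)
    )(x)
    x = layers.Dense(dense_units, activation="relu")(x)
    x = layers.Dropout(dense_dropout)(x)
    # Two softmax units: one probability per class.
    probabilities = layers.Dense(2, activation="softmax")(x)
    return tf.keras.Model(sequence_output, probabilities)
```

Calling `build_head()` with the defaults gives the layer sizes described above; smaller values for `hidden_size` and `lstm_units` are convenient for a quick shape check.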

For this model, we used the uncased large BERT model from TensorFlow Hub, which has 24 Transformer blocks, a hidden size of 1024, and 16 attention heads. The output layer uses two units with a softmax activation rather than a single unit with a sigmoid activation, so that the model's predict method can be used directly with Local Interpretable Model-Agnostic Explanations (LIME), which expects one probability per class.
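The reason for the two-unit output can be shown with a short NumPy sketch. As we understand it, LIME's text explainer calls a classifier function on a batch of perturbed texts and expects an array with one row per text and one column per class. A two-unit softmax already has that shape, whereas a single sigmoid unit would need a conversion like the hypothetical helper below (`sigmoid_to_two_class` is an illustrative name, not part of LIME or Keras).

```python
import numpy as np


def sigmoid_to_two_class(p_positive):
    """Convert single-unit sigmoid outputs of shape (n,) or (n, 1) to (n, 2).

    Column 0 is the probability of the negative class, column 1 the
    probability of the positive class.
    """
    p = np.asarray(p_positive, dtype=float).reshape(-1)
    return np.column_stack([1.0 - p, p])


# Hypothetical sigmoid outputs for two tweets.
two_class = sigmoid_to_two_class([[0.2], [0.9]])
print(two_class.shape)
```

With the softmax output layer, this extra step is unnecessary and the model's predictions can be handed to the explainer as they are.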

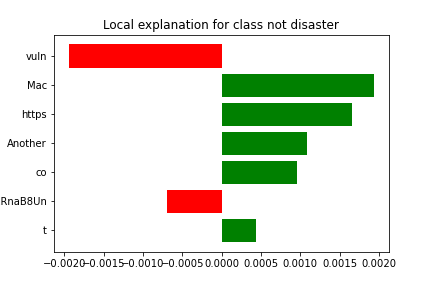

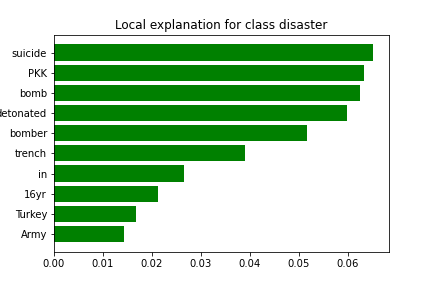

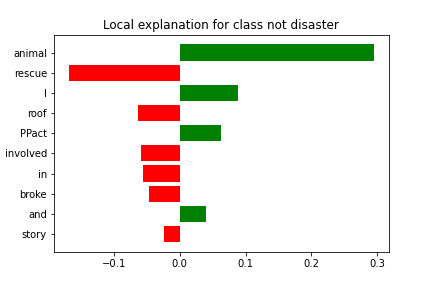

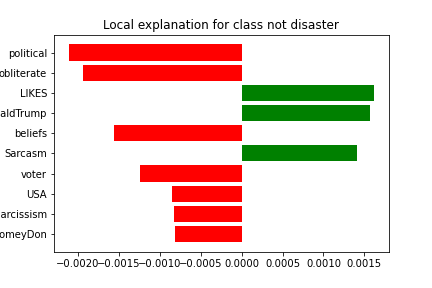

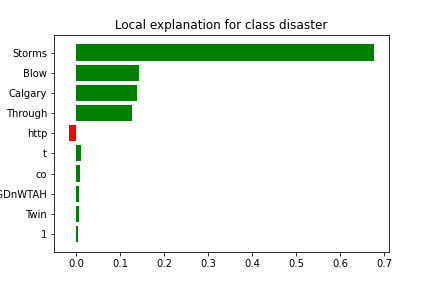

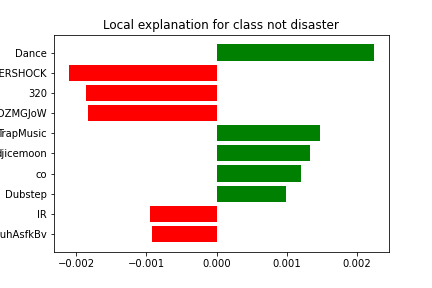

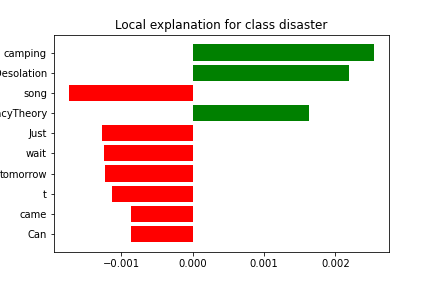

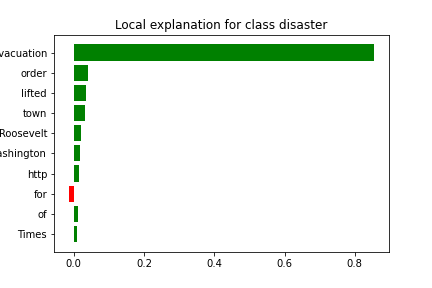

Explain Sample Tweets

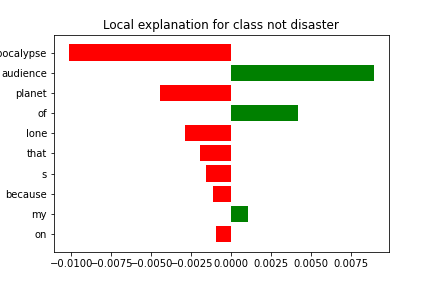

The following carousel shows a number of randomly selected samples for which the model predicts the class of the tweet. For each sample, we present the original tweet, the top words that influenced the model's decision, and the predicted and true labels.

Conclusion

After analyzing the model's predictions on a selection of randomly chosen tweets, we consider this model a reliable tool for this task. To further increase its reliability, we recommend removing URLs from the tweets.
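One possible way to apply this recommendation is a small preprocessing step that strips URLs before tokenization. The sketch below is an assumption about how that could look, not something evaluated on this page; the pattern and the name `remove_urls` are illustrative, and the example tweet is hypothetical.

```python
import re

# Matches http(s) links and bare www. links (an approximate pattern).
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+")


def remove_urls(text):
    """Strip URLs and collapse the whitespace they leave behind."""
    return " ".join(URL_PATTERN.sub(" ", text).split())


cleaned = remove_urls("Fire downtown http://t.co/abc123 stay safe")
print(cleaned)
```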